(Not so) Random Thoughts

Good Bye and Thanks to Hammarby IF

The 2013 season ended on November 2nd with a win against Östersunds FK in the last game at our home stadium, Tele2 Arena.

It was a pretty rough ride this season, with a coaching change, a new stadium and a new training ground (currently under construction). Unfortunately, we were not able to qualify for the highest division in Sweden (Allsvenskan) this year, and I feel very sorry about this for all the club’s supporters and for the players themselves. I wish all the best to the club, the players and the great supporters in the upcoming seasons. Hammarby deserves to play in Allsvenskan, and Allsvenskan needs Hammarby and their supporters.

Unfortunately, it is time for me to move on. After November, during which I will help the coaching staff with a 4-week training block, I will head back to Serbia. I don’t have much planned, but I am thinking about pursuing a PhD and I have applied for two potential PhD positions in Australia and New Zealand. In the meantime I plan to catch up on missed time with family and friends (everyone who works or lives outside their homeland knows what I am talking about), and to spend time training, reading, skiing and re-evaluating what could have been done differently and better.

The last two years were amazing and I am more than thankful to Hammarby, the players, the coaching staff and the supporters for giving me the opportunity and the trust to work as head physical preparation coach. I will miss you all!

New Article on Velocity-Based Strength Training

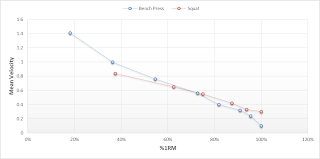

Together with my friend and co-author Eamonn Flanagan, strength and conditioning coach for the Irish Rugby Football Union, I have written a review paper entitled “Researched applications of velocity based strength training”, which has been accepted for publication in the Journal of Australian Strength and Conditioning.

The paper covers topics such as the load/velocity profile, the minimal velocity threshold, a novel velocity/exertion profile, daily 1RM estimates, and using velocity starts and velocity stops to prescribe strength training, all with how-tos in Excel. In short, it should be very applicable for coaches using linear position transducers (LPTs) like GymAware and the Tendo unit. Hopefully it will provide a good reference and a starting point for velocity-based strength training.
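To give a feel for how a load/velocity profile can produce a daily 1RM estimate, here is a minimal Python sketch. It is not taken from the paper (which uses Excel). The loads, velocities and the 0.30 m/s minimal velocity threshold are made-up illustration values, and a straight-line load/velocity relationship is assumed.

```python
# Hypothetical warm-up sets: bar load (kg) and mean concentric velocity (m/s).
loads = [40.0, 60.0, 80.0, 100.0]
velocities = [1.20, 0.95, 0.70, 0.45]

# Assumed minimal velocity threshold: the velocity expected at a true 1RM.
# The real value depends on the lift and the athlete.
MVT = 0.30


def fit_line(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def estimate_1rm(slope, intercept, mvt):
    """Load at which the fitted line drops to the minimal velocity threshold."""
    return (mvt - intercept) / slope


slope, intercept = fit_line(loads, velocities)
estimated_1rm = estimate_1rm(slope, intercept, MVT)
print(f"velocity = {intercept:.2f} + ({slope:.4f}) * load")
print(f"estimated 1RM: {estimated_1rm:.1f} kg")
```

Repeating the fit with the warm-up velocities of the day shifts the line up or down, and that shift is what moves the daily estimate.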

Pragmatic or Sport-Specific Approach in Exercise Testing and Evaluation?

I urge everyone to read the recent opinion and point-counterpoint article by Alberto Mendez-Villanueva and Martin Buchheit in the Journal of Sports Sciences, “Football-specific fitness testing: adding value or confirming the evidence?”, and the great paper by Chris Carling (see the interview with Chris HERE) in Sports Medicine, “Interpreting Physical Performance in Professional Soccer Match-Play: Should We be More Pragmatic in Our Approach?”

I believe that the sport specific approach to testing and training has finally started to show its flaws, which its supporters have overlooked. It has also been a great selling point, for both books and job vacancies, and it has been pushed way too far. What about concepts such as human specific or training specific?

As a complementarist, I believe in the importance of both the sport specific and the human specific approach: one needs to understand the details and nuances of the sport, its demands and its culture, but must not forget how humans in general move, adapt, learn and behave. To be completely honest, I believe that 50% of physical training is human specific, 30% is sport specific and 20% is individual specific. Just don’t put the cart before the horse, and remember the Big Rocks story.

Taking this discussion to the field of testing and evaluation, a lot of coaches and researchers ask what the sport specific test is for a certain factor of success in a given sport. Leaving aside the discussion of which physical factor is related to success, and how you describe and quantify success (for example, is VO2max related to distance covered, and is distance covered related to game outcome and season outcome? See the paper by Carling on this), there is the issue of whether these tests bring anything new to the table that can be used pragmatically in training.

I cannot agree more on this with Mendez-Villanueva and Buchheit. What we see is a bunch of testing batteries that only describe (quantitatively) what coaches already know intuitively: who is the fastest guy, who has the most endurance, who is the strongest, etc.

This is absolutely not an opinion against testing in general, but against testing (and monitoring) that doesn’t have any pragmatic value and provides only descriptive quantification. For example, the YoYo test is one of the most researched sport specific tests in soccer: it simulates the game, change of direction, short rests, and all that yada yada yada. But it gives you a distance that you cannot use in any prescriptive way at all. OK, I know that one player improved from 2,400m to 2,600m in two months in the YoYo test, but what actionable information does this give me or any other coach, except for comparing athletes (i.e. descriptive analysis)?

Again, this is not against YoYo testing overall, which can have great importance and value in descriptive roles (e.g. comparing teams, athletes or league levels), but against tests that don’t bring anything usable and actionable (pragmatic) to the table.

A lot of coaches ask me for advice on what should be tested with their team. My first reaction is “How do you plan to use that number?” My answer depends on their use of that number. I would say that if you don’t plan to use testing scores in any meaningful, actionable and pragmatic way, it might be just a waste of time, money and energy.

Taking this discussion one step further: we tend to focus too much on outcome or performance tests for both testing and monitoring, for example 40m time or vertical jump. Some of those can be used to prescribe training (MAS, 1RMs, etc.), but we might miss the underlying process that was responsible for the performance outcome, for example a difference in power output between legs in the vertical jump, the depth of the dip in the vertical jump, etc.

Yes, we need specialized and expensive equipment for these, but they might tell us more about HOW a certain performance is achieved. The human body is famous for system degeneracy. “Degeneracy is a property of complex systems in which structurally different components of the system interact to provide distinct ways to achieve the same performance outcome” (from Sports Med. 2013 Jan;43(1):1-7).

In other words, especially for monitoring training readiness, adaptation and overtraining, having insight into HOW things are achieved can be more informative than knowing only how much someone ran, jumped, lifted or threw. Besides, it can give PRAGMATIC information about what should or can be done to improve performance (prescriptive vs. descriptive).

In terms of monitoring neuromuscular fatigue (NMF), a lot of coaches use jump assessment. They track jump height over a period of time to spot any meaningful drops in performance. What might happen, and it might be very meaningful, is that even without a change in performance (jump height) athletes might use a different process to achieve the same score, such as a smaller dip or a longer contraction time, and that could be very meaningful in NMF assessment and even injury prevention.
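As a rough illustration of that idea, the Python sketch below flags a jump whose height is essentially unchanged but whose process variables have shifted. The variable names, the example numbers and both tolerance values (3% for height, 10% for process variables) are hypothetical placeholders, not validated thresholds.

```python
from dataclasses import dataclass


@dataclass
class Jump:
    height_cm: float        # outcome: jump height
    dip_cm: float           # process: countermovement depth
    contraction_s: float    # process: time from start of movement to take-off


def pct_change(new, old):
    """Percentage change of new relative to old."""
    return 100.0 * (new - old) / old


def flag_strategy_change(baseline, today,
                         height_tol_pct=3.0, process_tol_pct=10.0):
    """Return the process variables that shifted while height held steady.

    Both tolerances are illustrative assumptions. If height itself moved
    by more than height_tol_pct, an empty list is returned because the
    outcome measure already shows the change.
    """
    if abs(pct_change(today.height_cm, baseline.height_cm)) > height_tol_pct:
        return []
    flags = []
    if abs(pct_change(today.dip_cm, baseline.dip_cm)) > process_tol_pct:
        flags.append("dip_cm")
    if abs(pct_change(today.contraction_s, baseline.contraction_s)) > process_tol_pct:
        flags.append("contraction_s")
    return flags


baseline = Jump(height_cm=40.0, dip_cm=30.0, contraction_s=0.80)
today = Jump(height_cm=39.5, dip_cm=25.0, contraction_s=0.95)
flags = flag_strategy_change(baseline, today)
print(flags)
```

In this made-up case the height alone would raise no alarm, while the shallower dip and the longer contraction time would both be flagged.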

I hope that this random thought raised some questions, and that was the whole point of it. One more time: I am not bashing testing in general, but testing without purpose and pragmatic value. Sometimes this pragmatic value is only descriptive; sometimes, and we should aim toward this, it is more prescriptive. Testing should also give us some information that we don’t already know about the athletes, something that we can use to break performance plateaus and prevent injuries.

Inferential Statistics for Coaches? Naaaah!

There is a HUGE discrepancy between the analysis and visualization that coaches and clubs need and what researchers and journals use. What researchers and journals are interested in is “is there an effect in the population (usually on average)?” Since researchers can’t measure the whole population (this is not the Earth’s population, but the specific population involved in the research question, like elite rugby players, older lifters, etc.), they pick smaller samples. Using inferential statistics (inferential means providing estimates for a population based on samples), researchers check whether the effect seen in the sample is statistically significant, i.e. whether it can be generalized to the population. This is usually expressed with a p value or confidence intervals using null hypothesis testing (the null hypothesis is that there is no effect in the population).

One example might be whether cold baths improve recovery in soccer players. Since the question involves all soccer players, and researchers cannot test all of them, they take a random sample and set up an experiment. One group (the experimental group) gets the cold bath treatment and the other group (the control group) doesn’t. Then they measure some performance estimate (e.g. vertical jump) or subjective feeling (a rating of how sore or tired you are) and compare the groups. For example, both groups had a mean vertical jump of 44cm before treatment (cold bath). After treatment, the experimental group improved to 50cm while the control group improved to 46cm.

Most researchers are not interested in the practical meaningfulness or significance of such an effect (luckily, with Will Hopkins and magnitude based inferences this is changing), but rather in something called statistical significance. In the case of the cold bath, researchers want to see if the effect is significant in the population.

Using null hypothesis testing (the null hypothesis being no effect of the cold bath treatment), they estimate how probable it would be to observe an effect at least this large if the null hypothesis were true. This is called the P value (for more info check statistics books). Anything with P<0.05 is declared an effect and usually gets published. There is no talk of the magnitude of this effect, only of whether it is statistically significant (and that is VERY influenced by the number of subjects: more money, more subjects, a higher chance of seeing an effect, getting published and improving your research score).
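To make the sample-size point concrete, here is a small Python sketch using the cold bath numbers above (a 4cm difference between the groups after treatment). The 5cm between-athlete SD and the group sizes are my assumptions for illustration, and a simple normal (z) approximation is used instead of a proper t test.

```python
import math


def two_sided_p(mean_diff, sd, n_per_group):
    """Approximate two-sided p value for a difference between two group means.

    Normal (z) approximation, assuming the same known SD in both groups.
    """
    standard_error = sd * math.sqrt(2.0 / n_per_group)
    z = mean_diff / standard_error
    return math.erfc(abs(z) / math.sqrt(2.0))


EFFECT_CM = 4.0       # 50cm vs 46cm after treatment, both groups started at 44cm
ASSUMED_SD_CM = 5.0   # hypothetical between-athlete SD of vertical jump

# Same effect, same SD, only the number of athletes per group changes.
p_values = {n: two_sided_p(EFFECT_CM, ASSUMED_SD_CM, n) for n in (5, 10, 20, 50)}

for n, p in p_values.items():
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"n per group = {n:2d}   p = {p:.4f}   {verdict}")
```

Under these assumptions the same 4cm effect is “not significant” with 5 or 10 athletes per group and “significant” with 20 or 50, although its magnitude never changes.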

All of these inferential statistics for researchers are concerned with whether there is an effect (on average) in the population. Even if there is an effect, the range of that effect across individuals might be very wide. Remember the story about averages? “With my feet in the oven and my head in the freezer, on average I am just fine”.

Coaches, on the other hand, are not interested in making inferences about a population. They are interested in SINGLE athletes, not averages. No wonder they don’t understand inferential data analysis: they don’t need it.

It is beyond me why sport scientists still present analyses and figures to coaches using inferential statistics and averages, along with normality assumptions that are usually violated. Besides, inferential statistics is afraid of outliers, while in sport outliers and their discovery are of utmost importance.

Coaches are not interested in whether altitude training has an effect on average in the population of elite athletes, but rather in whether it will work for John, Mickey and Sarah. Single case studies. Ranges in single case studies.

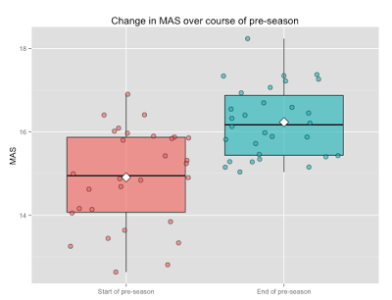

What I have in mind is to write a practical paper outlining the best methods for simple descriptive analysis and visualization techniques that coaches can use from day one, using the Smallest Worthwhile Effect (SWE) and the Typical Error (TE) and showing how to visualize them to get an idea of the practical significance of an effect. I am currently collecting good visualization practices for this purpose and trying to implement them in either Excel or R/ggplot2 (I believe R is going to be the choice), and then I will write a review paper.
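Until that paper exists, here is a minimal Python sketch of how SWE and TE can be used to judge a single athlete’s change. It follows one common convention (SWE as 0.2 of the between-athlete SD, TE as the SD of test-retest differences divided by the square root of 2). The jump data are made up, and using a band of plus or minus one TE around the observed change is a simplifying assumption of mine.

```python
import math
from statistics import stdev

# Hypothetical test-retest jump heights (cm): same athletes, two trials.
trial_1 = [40.0, 44.0, 48.0, 52.0, 36.0]
trial_2 = [41.0, 43.0, 49.0, 51.0, 37.0]


def smallest_worthwhile_effect(baseline, factor=0.2):
    """SWE as a fraction (0.2 by convention) of the between-athlete SD."""
    return factor * stdev(baseline)


def typical_error(first, second):
    """TE as the SD of the test-retest differences divided by sqrt(2)."""
    diffs = [b - a for a, b in zip(first, second)]
    return stdev(diffs) / math.sqrt(2.0)


def classify_change(change, swe, te):
    """Compare the band change +/- TE with the +/- SWE zone."""
    lower, upper = change - te, change + te
    if lower > swe:
        return "improvement"
    if upper < -swe:
        return "decline"
    if lower >= -swe and upper <= swe:
        return "trivial"
    return "unclear"


swe = smallest_worthwhile_effect(trial_1)
te = typical_error(trial_1, trial_2)
print(f"SWE = {swe:.2f} cm, TE = {te:.2f} cm")

# Hypothetical changes in jump height (cm) for individual athletes.
for athlete, change in [("John", 3.0), ("Mickey", 1.0), ("Sarah", -3.0)]:
    print(athlete, change, classify_change(change, swe, te))
```

The output is a verdict per athlete rather than a group average, which is the kind of answer a coach is after.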

Anyone who can help, or who is interested in contributing, is welcome to contact me.

Setting Goals and Stoicism

Take any psychology or training book and it will talk about setting goals and the classification of goals into (1) outcome, (2) performance and (3) process goals.

Outcome goals are related to competition results, like “I want to be first in competition”, “We want to get over 50 points in the league”, etc.

Performance goals are related to, well, performance improvements that could increase the chances of achieving outcome goals: “I want to improve my shooting percentage”, “I want to improve my 1RM by 2.5%”, “I want to improve my minutes per mile by 10 seconds”, etc.

Process goals are related to the journey and training: “I want to give my best effort, sleep well, and get that training done”, “I want to accumulate over 100 TSS units daily on a bike”, “I want to get to the gym 5 times a week”.

I believe that we are too focused on performance goals and neglect the journey, or process goals. Outcome and performance goals give us purpose and direction, but sometimes we cannot control whether we reach them, and that can lead to frustration and burnout.

The biology of adaptation is complex: we vary in our reactions and adaptations to training. We can’t control a lot of things beyond training hard and smart, sleeping extra, eating well, etc. If we focus on end-points, especially end-points we cannot control, it might lead to burnout and disappointment.

Last year I was reading a lot about stoicism (one great book to consider is A Guide to the Good Life by William Irvine) and it really influenced me. It is quite hard to practice and to fight the “inner Chimp”, but it is a great philosophy.

Anyway, the following picture is pure gold and is based on the stoic principle of control. I believe we should spend more time on setting process goals and on actions we can control and enjoy (the journey), instead of taking a tunnel-vision approach to training goals.

Set the important process goals (aimed at achieving certain outcomes/performance) and get into action. That is within your control. You can’t control your opponent, how your body is going to react, the referees, etc. These are beyond your control and thus not worth worrying about.
