HIIT Conditioning: Planning Strategies – Part 1

This is a sequel to the HIIT Conditioning article series, called Planning Strategies, which consists of two parts.

In the first part, among other topics, I will focus on an introduction to Planning Strategies, Heuristics and Uncertainty, as well as the three types of analysis: Phenomenological analysis, Mechanistic (or Performance) analysis and Physiological analysis.

All contemporary planning strategies are based on the assumption of predictability (certainty) and a simplistic causal network.

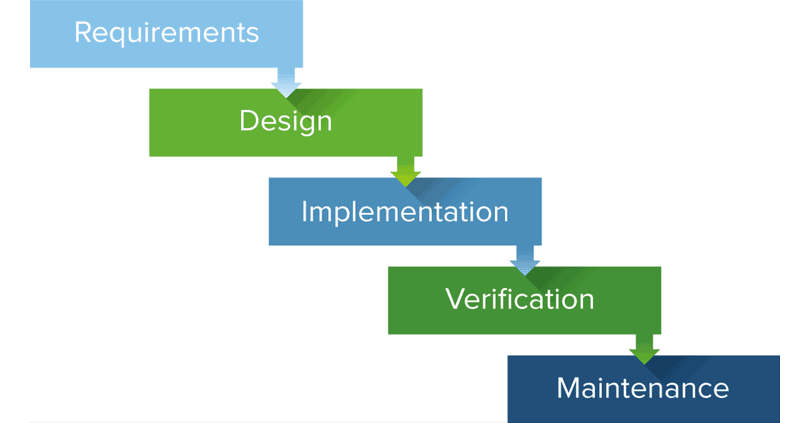

Assume that your government gave you a huge budget to build a new factory, and promised you 200 skilled workers, 10 MBA experts, a safe market for the product, and a tax deduction. You have 5 years to finish the project. You will certainly approach this project in a waterfall manner:

You will spend a few months making plans, acquiring all the needed licenses, creating budgets, and recruiting engineers, and then you will proceed with building things. Later you will equip the buildings and create monitoring tools for the workers. Everything is done in a very discrete, serialized manner.

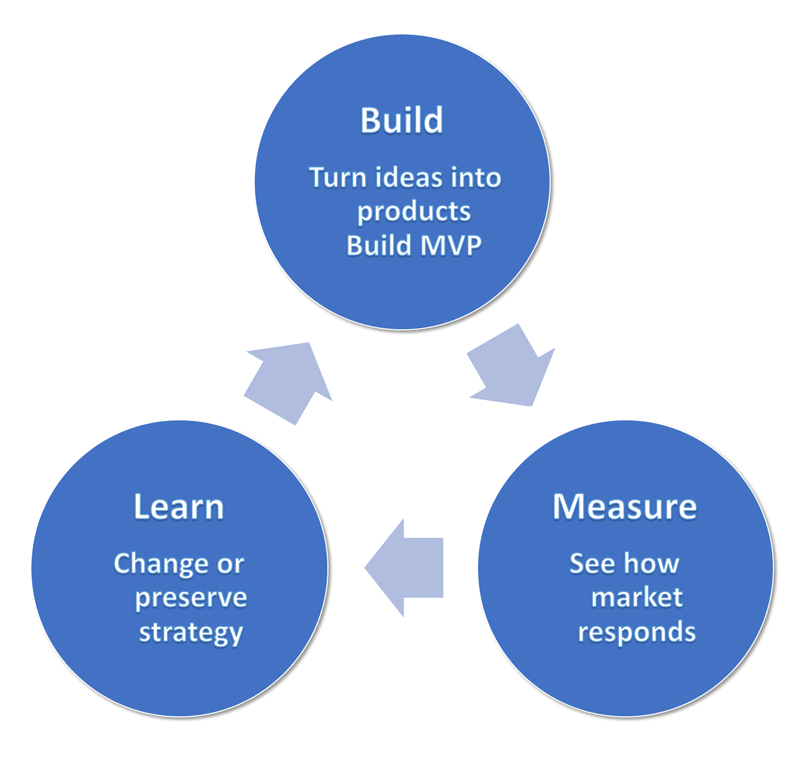

Let’s imagine another scenario. You recently got fired from your job, and you have a family to support and a mortgage to pay off. You have some life savings and you are willing to invest them in a great new app you have in mind. How would you approach this project? Will you risk spending all of your savings on developing an app for two years that no one will buy or use? Or should you put something on the market as soon as possible, minimizing the risk and maximizing what you learn about what interests the market? You will develop an MVP, a minimum viable product, which you will launch to see if your project has any future to begin with. This process is outlined in the Lean Startup methodology.

What is the difference between the two scenarios above? It is the uncertainty of the second, together with its bounded (constrained) resources. All contemporary planning strategies approach training as if it were a factory to be built in a perfectly predictable scenario. The Agile Periodization paradigm I have been developing over the years (and still am) approaches training planning by understanding and embracing the uncertainties and constraints involved.

Which uncertainties, you might ask? There are plenty, and they all propagate to your day-to-day decision making as a coach. Luckily, there is a huge body of knowledge on robust decision making under uncertainty. But let’s quickly cover the uncertainties involved (at least the known ones):

Measurement uncertainties

All performance measures have measurement error, which makes some more reliable and some less. In plain English, some measures capture more noise than signal (e.g. RSA measures are not good).
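One rough way to put numbers on "more noise than signal" is to compare the change you expect from training with the test's own measurement error. The sketch below is only an illustration: it uses the typical error (the standard deviation of test-retest differences divided by the square root of two) as the noise estimate, and the function names, the scores, and the expected change are all hypothetical.

```python
import statistics

def typical_error(trial_1, trial_2):
    """Typical error from two repeated trials: SD of the differences / sqrt(2)."""
    diffs = [b - a for a, b in zip(trial_1, trial_2)]
    return statistics.stdev(diffs) / 2 ** 0.5

def signal_to_noise(expected_change, noise):
    """Ratio of the change we hope to detect to the measurement noise."""
    return expected_change / noise

# Hypothetical test-retest scores for five athletes (arbitrary units).
day_1 = [10, 12, 11, 13, 12]
day_2 = [11, 11, 12, 13, 13]

te = typical_error(day_1, day_2)
# Hypothetical expected training effect of 0.5 units.
ratio = signal_to_noise(expected_change=0.5, noise=te)
print(f"typical error: {te:.2f}, signal-to-noise: {ratio:.2f}")
```

A ratio below 1 would mean that the change you are hoping to see is smaller than the noise of the test itself, which is the situation described above.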

Model uncertainties

Our training models are based on outdated factor analysis models (which are also not exact) and anecdotal evidence, which together produced a latent structure of motor abilities (constructs). This structure is very simplistic and needs to be understood as a satisficing heuristic rather than an ontological reality. The underlying causal model of what causes what might be very complex, and our simplistic reasoning might be especially flawed when working with elite athletes.

The general goal of such an analytic approach is to identify limiting factors, rate limiters, or determinants of performance. But these things are not as easily identifiable in complex human organisms and environments.

For this reason, I believe the way to go is to embrace both reductionistic analytical approaches and complex phenomenological approaches. In other words, accept both the Apollonian and the Dionysian: rational and intuitive, objective and subjective.

The chicken or the egg uncertainty

Most constructs and determinants of performance are actually derived from performance. For example, we can derive MAS and MSS from two time trials (e.g. 100m and 400m). So stating that improving MAS and MSS improves performance is a bit flawed, since they are themselves derived from performance. One could just as well state it the other way round: improving performance improves MAS and MSS. A friend of mine, an extremely smart lab coat, commented that we cannot say that the Sun shines because the flower grows. He was alluding to the fact that performance changes cannot precede changes in physiological qualities such as VO2max (not VO2peak, though), excluding psychological improvements, better pacing, etc. I would tend to agree with that, although not necessarily with the VO2max notion, since there might be a third variable that precedes both and causes improvement in both VO2max/peak and performance.

It is easy to get lost in the philosophy of causality (what causes what, and what precedes what). The point to remember is that things are not as clear and certain as lab coats want us (or themselves) to believe.

Prescription uncertainties

As you have probably seen so far, prescription is not an exact science. There are numerous adjusting factors (e.g. start loss, COD loss), numerous ways to prescribe (using MAS, IFT, ASR and many others), and numerous modalities (e.g. track, grass, hill, stairs, you name it). Taking all this together, it is too optimistic to expect an optimal prescription. A satisficing prescription is the realistic aim.

Intervention uncertainties

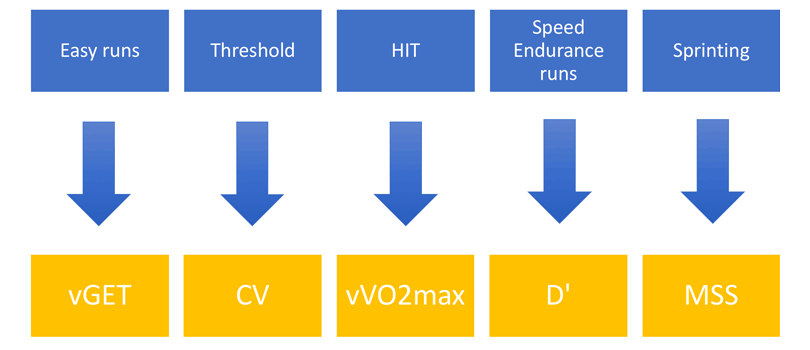

Since we have identified certain constructs and determinants of performance, we immediately assume that each has its own designated training intensity and training methods that help improve it and hence improve performance.

This is flawed. It is a warm comfort for our certainty- and order-seeking brains, but it could not be further from the truth. We do not know in advance which training stimuli cause which adaptations in a single individual. We can get some estimates of what will happen for the average athlete, but not for the particular athlete at hand. This is especially the case when dealing with an elite athlete on the brink of a new world record. For this reason, I despise the term evidence-based practice, especially since it is based on garbage-in-garbage-out meta-analyses of studies on students motivated to get a passing grade. A better term would be best practices, which should come from tinkering in practice, or practice-based evidence. At least it would be more honest and give coaches more realistic expectations.

Individual uncertainties

We tend to believe that by using relative loads (e.g. %MAS, %ASR, %1RM, %PeakPower, etc.) in training prescription, we create a level playing field, where each athlete receives a load relative to his or her maximum (or potential). This is indeed a step forward from using the same absolute prescription for everyone, but it is an SJW wet dream. There is an unlimited number of metrics we could use to express the same relative load, so it is futile to even try to equalize it. The goal is, once again, satisficing: finding a good enough strategy and accepting the uncertainties.

Even with a perfectly level playing field, the stimulus needed will differ between individuals. For example, even though it is expressed in relative terms, doing 15:15 at 120% MAS for 4 sets of 6 minutes might be enough to cause adaptation in Athlete B, but too much for Athlete C. Add to that the day-to-day variability in athlete readiness and receptiveness to training load, and we have a huge amount of uncertainty. We just do not know in advance how someone is going to react to a training stimulus. We do know the expected average reaction, but we never know how a single individual is going to respond, or with what magnitude.
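To make the relative-load example concrete, here is a minimal Python sketch of the arithmetic behind a 15:15 prescription at 120% MAS. The MAS values for the two athletes and the helper names `interval_distance` and `reps_per_set` are hypothetical, and the sketch deliberately ignores adjustments such as start loss or COD loss.

```python
def interval_distance(mas_m_per_s, pct_mas, work_s):
    """Distance (m) to cover in one work interval at a given percentage of MAS."""
    return mas_m_per_s * pct_mas / 100.0 * work_s

def reps_per_set(set_min, work_s, rest_s):
    """Number of complete work:rest cycles that fit into one set."""
    return int(set_min * 60 // (work_s + rest_s))

# Hypothetical MAS values in metres per second.
athletes = {"Athlete B": 4.4, "Athlete C": 5.0}

reps = reps_per_set(set_min=6, work_s=15, rest_s=15)
for name, mas in athletes.items():
    distance = interval_distance(mas, pct_mas=120, work_s=15)
    print(f"{name}: {reps} reps per set, {distance:.1f} m per 15 s run")
```

Both athletes get the same relative load and a different absolute distance. What the arithmetic cannot tell us is whether that load is enough, or too much, for either of them.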

Situation uncertainties

There can be plenty of these. The most basic might be performing on grass or in mud in the rain, and how that affects the athlete’s performance, the adaptation stimulus, and hence the response. HIIT (or any other training, for that matter) could be performed during a winning streak or a losing streak. The coach and the athletes might be under stress to perform after losing the last two games. The coach might be under the careful eye of the board and have to deliver performance soon. Pressured and afraid, he will favor short-term performance over long-term development. This will affect the training.

Athlete attendance might be an issue. If you plan to do HIIT only on Wednesdays and an athlete misses that workout, he or she will go 14 days without that type of stimulus, and the next time he or she does it, it will probably cause more issues, such as soreness.

There are indeed many other situation uncertainties that need to be taken into account when creating a training plan.

All of the above uncertainties interact and propagate further down the line of decision making. So, what should we do? Should we continue pretending that athletes are to be trained the same way a factory should be built, and follow the legacy of Frederick Taylor’s Scientific Management, or should we embrace the complexities, uncertainties and constraints of both the human organism and the context? If so, how?

Luckily for us, there is a great body of knowledge on decision making under uncertainty, starting from Herbert Simon, one of the fathers of modern Artificial Intelligence (AI), whose term satisficing I have been using from the very start of this article series, up to Gerd Gigerenzer and Nassim Taleb. What Agile Periodization aims to achieve is to approach training from the complexity and uncertainty perspective rather than the reductionistic biological perspective, and to apply knowledge from other domains such as the IT industry (e.g. Agile, SCRUM methodologies) and AI.

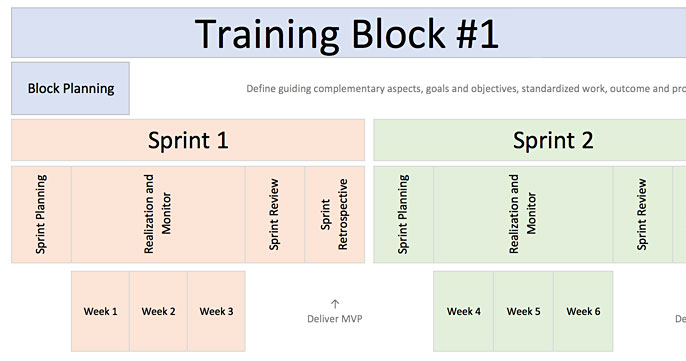

What follows are the Agile Periodization principles applied to HIIT planning.

Heuristics and Uncertainty

Imagine you have a certain amount of money you want to invest in stocks, with the hope of making a profit (and minimizing the risk). In 1990, Harry Markowitz received the Nobel Prize in Economics for his theoretical work on optimal asset allocation, trying to answer the practical question of “How to invest your money in N assets”. By analyzing the historical performance of those assets, Markowitz was able to show that there is an optimal portfolio that maximizes the return and minimizes the risk. The funny thing is that when Markowitz invested his own retirement savings, he didn’t use his award-winning optimization technique, but relied on a simple heuristic, the 1/N rule: “Allocate your money equally to each of N funds.”

Heuristics, as the example above shows, are simple rules of thumb that disregard a lot of information. They don’t try to find the optimal solution, but a satisficing (good enough) one.

There is considerable research comparing the 1/N rule with optimization techniques, and the results indicate that optimization techniques are better at fitting historical data, but perform worse than 1/N when trying to predict the future. In statistics, this is called overfitting: the model is great at predicting historical data (the data that was used to train it), but performs miserably when evaluated on unseen data.
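The gap between fitting history and predicting the future can be illustrated with a small simulation. The sketch below is not Markowitz's mean-variance optimization. It uses a deliberately crude stand-in for "optimizing on history" (put everything on the asset with the best historical average) and compares it with 1/N. All parameters are hypothetical, and every simulated asset is assumed to have the same true mean return and volatility, so any historical winner is a winner by luck alone.

```python
import random
import statistics

def simulate(n_assets=10, n_history=24, n_future=1000, seed=1):
    rng = random.Random(seed)

    def draw_series(length):
        # Assumption: identical true mean (0.5%) and SD (5%) for every asset.
        return [rng.gauss(0.005, 0.05) for _ in range(length)]

    history = [draw_series(n_history) for _ in range(n_assets)]
    future = [draw_series(n_future) for _ in range(n_assets)]

    # "Optimized on history": pick the asset with the best past average.
    hist_means = [statistics.mean(asset) for asset in history]
    winner = max(range(n_assets), key=hist_means.__getitem__)

    def equal_weight(returns):
        # Per-period return of the 1/N portfolio.
        return [statistics.mean(period) for period in zip(*returns)]

    return {
        "in_sample_winner": hist_means[winner],
        "in_sample_1_over_n": statistics.mean(equal_weight(history)),
        "future_sd_winner": statistics.stdev(future[winner]),
        "future_sd_1_over_n": statistics.stdev(equal_weight(future)),
    }

result = simulate()
for key, value in result.items():
    print(f"{key}: {value:.4f}")
```

On the historical data the picked winner can never look worse than 1/N, because the best average is at least as high as the average of averages. On the unseen data that advantage is gone by construction, and the concentrated bet carries far more variability than the equally weighted portfolio.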

Why did 1/N perform better than Markowitz’s asset optimization technique? First of all, for the Markowitz model to work, one needs a great amount of historical data (I might be wrong, but somewhere around 30 years). But the problem is not tractability, but rather predictability and robustness. Heuristics are more robust than optimization techniques.

It is important to know in which scenarios heuristics will perform better than optimization techniques. Optimization techniques perform much better at predicting the history (yes, I wrote that correctly: predicting, or retrodicting, what already happened) and in situations of predictability. Heuristics perform much better in uncertain situations, where one cannot enumerate the events and calculate their probabilities. Gerd Gigerenzer has done a great amount of work in this area, and has warned about confusing risk (known unknowns, where we can estimate the probabilities of events) with uncertainty (unknown unknowns, where we cannot estimate probabilities). Nassim Taleb calls this the ludic fallacy, or “Life is not a casino!”

Contemporary planning strategies assume predictability and tractability (the ludic fallacy) when devising optimal periodization models. Unfortunately, or luckily, due to the many uncertainties involved, performance and performance planning belong to the complex and uncertain domain. If you remember the story from the beginning of this chapter, we shouldn’t approach training planning as if we were building a factory in a predictable economy, but rather as if we were building a startup with a questionable market. Planning strategies should be more concerned with robustness than with optimization. To quote Gerd Gigerenzer: “When faced with significant irreducible uncertainty, the robustness of the approach is more relevant to its future performance than its optimality”.

Planning in the performance domain revolves around answering the following questions:

- What should be done?

- When does it need to be done?

I will answer these two questions from the uncertainty and robustness perspective, which is the basis of Agile Periodization, rather than from the ludic fallacy of believing that situations and individual reactions are predictable (and that there is an optimal solution). Similar to the Bayesian and frequentist approaches in statistics (pardon my French), these two approaches can converge to the same solution under certain constraints.

To answer the first question, what should be done, I will turn to the concept of MVP, or minimum viable program (it can also stand for minimum viable performance), and to iterative planning. To answer the second, when it needs to be done, I will turn to the concept of microdosing (coined, I believe, at least in the performance domain, by sprint coach Derek Hansen). Concepts such as Nassim Taleb’s Barbell Strategy, as well as randomization, also need to be covered, due to their importance in answering both questions.
