Bridging AI and Sports Science: How Model Context Protocols (MCPs) and Retrieval-Augmented Generation (RAG) Systems Can Personalize Training

Introduction: The AI Boom and the Lack of Practical Use in Sports Science

Over the past few years, large language models (LLMs) and chatbots have rapidly become part of our daily and professional lives. They write, summarize, code, analyze, and increasingly think alongside us. However, in the field of sports performance and coaching, practical applications of AI remain underdeveloped relative to the surrounding hype: many tools are labeled as “AI” despite relying on classical linear or logistic regression. Although these methods technically count as machine learning models, their practical use is limited compared with the capabilities of modern LLMs.

I was interested in exploring how these technologies – particularly large language models (LLMs) – could support coaches and athletes not merely by entertaining or automating tasks, but by delivering genuinely practical value.

As a long-time enthusiast of both data science and strength and conditioning, I’ve been fascinated by how these new AI systems could become real coaching assistants rather than abstract tools. When Model Context Protocols (MCPs) appeared on the scene less than a year ago, I immediately saw their potential: they could serve as connectors between real-world data (e.g., training logs from AthleteSR, Strava, or Garmin Connect) and intelligent systems that interpret these data through the lens of sport science principles.

In this article, I’ll demonstrate how to build a system that integrates an MCP server for the AthleteSR software (which can also connect with the Strava app or any other fitness application that exposes a REST API) with a Retrieval-Augmented Generation (RAG) system powered by influential research and theoretical frameworks from sport science. Together, these components form a multi-agent environment – one agent retrieves real performance data, while the other provides expert knowledge. The outcome is a chatbot capable of generating personalized training plans and offering evidence-based recommendations grounded in both the athlete’s monitored performance or wellness data and established theoretical frameworks, such as Malden’s books Agile Periodization and High-Intensity Interval Training (HIIT) or similar works.

Next, I’ll break down each component of the system (MCP and RAG) without delving too much into the technical details, and then demonstrate how they work together to generate answers to user questions.

Model Context Protocols (MCP): What They Are and Why They Matter

In simple terms, Model Context Protocols (MCPs) act as smart translators between data sources and AI models. They let large language models (LLMs) access and use live or external data – for example, pulling athlete information directly from AthleteSR – without any manual copying and pasting. An MCP server can be viewed as a toolbox that an AI agent draws on. In agent-based systems, tools (often defined as decorated functions, hence sometimes referred to as decorators) are the core capabilities that allow agents to perform tasks and interact with users or other systems.

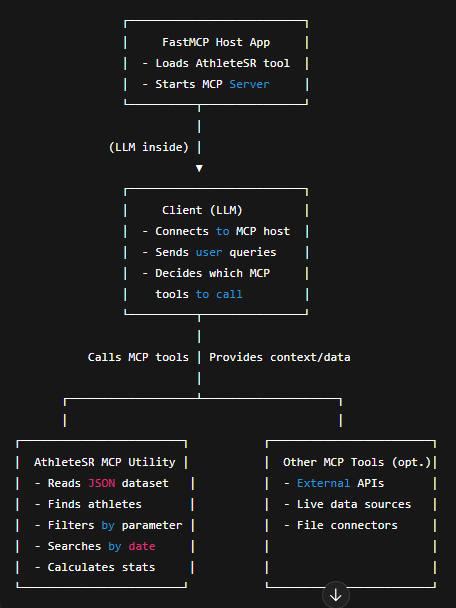

MCP defines a clear structure for communication between three main roles:

🖥️ 1. MCP Host

The host is the AI application the user interacts with; it manages one or more MCP clients, each of which connects to an MCP server.

In the current setup, the server itself is hosted on FastMCP Cloud. AI Engine acts as the host: it calls the MCP server over a URL, loads the AthleteSR tools, and handles all communication between the LLM and the MCP server.

🤖 2. MCP Client

The client is an agent or LLM application that uses this interface to request data or actions and receive structured responses. In this setup, the large language model (LLM) acts as the client and calls the tools exposed by the server.

It sends user queries to the host, decides when to call a specific tool, and interprets the tool’s output to create natural-language responses.

🧰 3. Server

The server is a web service that exposes data and provides context to MCP clients.

Each MCP tool performs defined tasks – reading data, filtering results, or computing statistics – and returns structured output to the host (in our case JSON).

In short, MCP acts as a bridge, or a “USB-C port for AI”, between the AI model and real-world data: a single, standard interface that allows any data source or tool to connect with any agent, without custom integration code.

It enables the model to fetch, process, and summarize information on demand while keeping everything structured, reproducible, and extendable as more tools or data sources are added in the future.

You can think of the workflow like this:

User → LLM (Client) → MCP Host → AthleteSR MCP Server → Processed Data → LLM → Output (summary or recommendation)

In this setup, the MCP handles all the behind-the-scenes communication between the data and the AI model. It ensures that the AI tool (a chatbot or similar) always has the most relevant and up-to-date information when generating an answer (diagram below).

TL;DR

The MCP tool performs the following tasks:

- Receives user requests via the AI Engine Bot

- Calls the AthleteSR API in real time

- Fetches athlete’s monitoring data (e.g., wellness measures, training load, readiness ratings)

- Returns structured JSON to the AI LLM model, segmented by athlete, date range, parameters, or other dimensions.

- The LLM then generates an answer that takes the retrieved data into account
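The request and response cycle summarized above can be sketched in a few lines. MCP messages follow JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. Everything else here is an assumption made for illustration: the tool name `by_player`, its argument, the sample data, and the in-process `handle_request` dispatcher stand in for the real AthleteSR server, whose code is not shown in this article.

```python
import json

# Hypothetical in-memory stand-in for data the real server would fetch from AthleteSR.
SAMPLE_EVENTS = [
    {"player": "Player A", "sleep": 7.0},
    {"player": "Player B", "sleep": 4.5},
]

def by_player(name: str) -> list[dict]:
    """Toy tool: return the events recorded for one player (case-insensitive)."""
    return [e for e in SAMPLE_EVENTS if e["player"].lower() == name.lower()]

# Registry mapping tool names to Python callables (illustrative only).
TOOLS = {"by_player": by_player}

def handle_request(raw: str) -> str:
    """Dispatch a JSON-RPC 2.0 'tools/call' request to a registered tool."""
    request = json.loads(raw)
    params = request["params"]
    result = TOOLS[params["name"]](**params["arguments"])
    response = {
        "jsonrpc": "2.0",
        "id": request["id"],
        # Tool output travels back as text content holding the JSON payload.
        "result": {"content": [{"type": "text", "text": json.dumps(result)}]},
    }
    return json.dumps(response)

# What the client sends when the LLM decides to call the tool.
request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "by_player", "arguments": {"name": "player b"}},
})

reply = json.loads(handle_request(request))
payload = json.loads(reply["result"]["content"][0]["text"])
print(payload)
```

In a real deployment the dispatcher would be provided by the MCP server framework rather than written by hand; the point of the sketch is only the shape of the messages.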

How the Main AthleteSR Tools Work

AthleteSR Data Fetcher

This function retrieves Morning Wellness data via an API, validates and cleans the event list, and returns a structured JSON response for the LLM. It ensures athlete data is present, removes empty entries, merges first and last names into a single player name, and returns an empty list with a warning if the data is missing or invalid.
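A minimal sketch of the cleaning step described above might look as follows. The payload shape and the field names `events`, `first_name`, and `last_name` are assumptions chosen for illustration, not the actual AthleteSR schema, and the athlete in the example is made up.

```python
def clean_wellness_events(api_response) -> dict:
    """Validate a raw API payload and return a cleaned, LLM-friendly structure."""
    events = api_response.get("events") if isinstance(api_response, dict) else None
    if not isinstance(events, list) or not events:
        # Missing or invalid data: return an empty list plus a warning.
        return {"events": [], "warning": "No valid wellness data found."}

    cleaned = []
    for event in events:
        if not isinstance(event, dict) or not event:
            continue  # drop empty or malformed entries
        first = str(event.get("first_name") or "").strip()
        last = str(event.get("last_name") or "").strip()
        player = f"{first} {last}".strip()
        if not player:
            continue  # an entry without an athlete name cannot be attributed
        # Copy every other field and replace the two name fields with one.
        row = {k: v for k, v in event.items() if k not in ("first_name", "last_name")}
        row["player"] = player
        cleaned.append(row)

    if not cleaned:
        return {"events": [], "warning": "No valid wellness data found."}
    return {"events": cleaned}

raw = {"events": [{"first_name": "Ana", "last_name": "Kovac", "sleep": 7}, {}, None]}
result = clean_wellness_events(raw)
print(result)
```

Returning a warning alongside an empty list, rather than raising an error, gives the LLM something it can explain to the user.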

AthleteSR By Player

This function performs a case-insensitive athlete name lookup, prioritizing exact matches and falling back to partial matches. It returns only the matching athlete’s data, or an empty list if no match is found.
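The exact-then-partial matching logic can be sketched like this. The function name `find_player`, the `player` key, and the sample athletes are hypothetical and only illustrate the lookup order.

```python
def find_player(events: list[dict], query: str) -> list[dict]:
    """Case-insensitive lookup: exact name matches first, partial matches as fallback."""
    needle = query.strip().lower()
    if not needle:
        return []
    exact = [e for e in events if e.get("player", "").lower() == needle]
    if exact:
        return exact
    # No exact match: fall back to substring matching.
    return [e for e in events if needle in e.get("player", "").lower()]

events = [
    {"player": "Ana Kovac", "sleep": 7},
    {"player": "Ana Kovacevic", "sleep": 5},
]

print(find_player(events, "ana kovac"))  # exact match only
print(find_player(events, "kovac"))      # partial match returns both athletes
print(find_player(events, "smith"))      # no match returns an empty list
```

Prioritizing exact matches keeps a query for one athlete from also returning teammates whose names merely contain the same string.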

AthleteSR By Parameter

This allows case-insensitive searches by any parameter (sleep, mood, energy, or body pain), including numeric filters (e.g., >5, <=3.5). You can choose to get full athlete details or just their names.
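One way to support filters such as `>5` or `<=3.5` is to parse the condition into an operator and a threshold. The sketch below is an assumption about how such a tool could work, not the actual implementation; the function name, the `names_only` flag, and the sample data are illustrative.

```python
import operator
import re

# Comparison operators supported in filter strings such as ">5" or "<=3.5".
OPS = {">": operator.gt, ">=": operator.ge, "<": operator.lt,
       "<=": operator.le, "==": operator.eq, "=": operator.eq}

# Two-character operators come first so that ">=" is not read as ">".
FILTER_RE = re.compile(r"^\s*(>=|<=|==|>|<|=)\s*(-?\d+(?:\.\d+)?)\s*$")

def filter_by_parameter(events, parameter, condition, names_only=False):
    """Keep events whose `parameter` value satisfies a condition like '<=3.5'."""
    match = FILTER_RE.match(condition)
    if match is None:
        raise ValueError(f"Unsupported filter: {condition!r}")
    compare, threshold = OPS[match.group(1)], float(match.group(2))

    wanted = parameter.strip().lower()
    hits = []
    for event in events:
        # Match the parameter name case-insensitively against the event's keys.
        for key, value in event.items():
            if key.lower() == wanted and isinstance(value, (int, float)):
                if compare(value, threshold):
                    hits.append(event)
                break
    if names_only:
        return sorted({e["player"] for e in hits})
    return hits

events = [
    {"player": "A", "Sleep": 4},
    {"player": "B", "Sleep": 8},
    {"player": "C", "Sleep": 3.5},
]
print(filter_by_parameter(events, "sleep", "<=3.5", names_only=True))
print(filter_by_parameter(events, "SLEEP", ">5"))
```

Rejecting unrecognized conditions with an explicit error is a deliberate choice here: it lets the LLM rephrase the tool call instead of silently receiving unfiltered data.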

AthleteSR By Date

This function handles Wellness Questionnaire events, using the Survey Start Date and supporting various date formats. You can query ranges like “last 4 weeks,” “this month,” or “between X and Y.” If no end date is provided, it defaults to the latest date in the dataset and returns data for all players matching the date criteria.

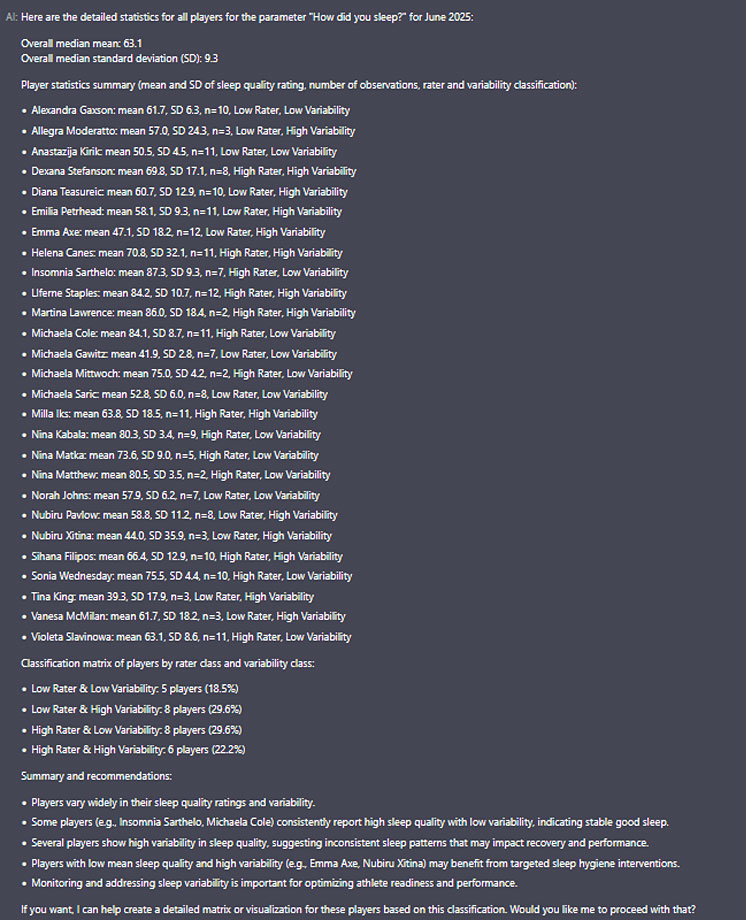
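The date handling described above can be sketched as follows. The field name `survey_start_date`, the three accepted formats, and the choice to anchor “last N weeks” on the end date are assumptions for illustration; the real tool may resolve relative ranges differently.

```python
from datetime import date, datetime, timedelta

# Date formats accepted by this sketch; the real tool may support others.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")

def parse_date(text: str) -> date:
    """Try each known format in turn and return the first successful parse."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date: {text!r}")

def filter_by_date(events, start=None, end=None, last_weeks=None):
    """Return events whose survey date falls inside [start, end], inclusive."""
    dated = [(parse_date(e["survey_start_date"]), e) for e in events]
    if not dated:
        return []
    # With no explicit end date, fall back to the latest date in the dataset.
    end_date = parse_date(end) if end else max(d for d, _ in dated)
    if last_weeks is not None:
        start_date = end_date - timedelta(weeks=last_weeks)
    elif start:
        start_date = parse_date(start)
    else:
        start_date = min(d for d, _ in dated)
    return [e for d, e in dated if start_date <= d <= end_date]

events = [
    {"player": "A", "survey_start_date": "2025-01-01"},
    {"player": "B", "survey_start_date": "15/02/2025"},
    {"player": "C", "survey_start_date": "01.03.2025"},
]
recent = filter_by_date(events, last_weeks=4)
print([e["player"] for e in recent])
```

Note that slash-separated dates are read as day/month/year in this sketch; a production tool would need an explicit convention for that ambiguity.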

AthleteSR Parameter Stats

This is the most interesting tool: it calculates statistics for each player – the mean and the standard deviation (how much their scores vary) – for a chosen parameter. It then classifies players as:

- High or Low Rater (based on their mean vs. the group median)

- High or Low Variability (based on their SD vs. the group median)

Players with only one data point are excluded from the analysis, because a standard deviation cannot be computed from a single value. All results are rounded to three decimals for clarity.
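The classification logic can be sketched with Python’s standard `statistics` module. Two details are assumptions, since the article does not specify them: the sketch uses the sample standard deviation (n − 1 denominator), and a player whose value equals the group median is labeled “Low”. The function name, the `player` key, and the data are illustrative.

```python
from statistics import mean, median, stdev

def parameter_stats(events, parameter):
    """Per-player mean and SD for one parameter, classified against group medians."""
    values = {}
    for event in events:
        if isinstance(event.get(parameter), (int, float)):
            values.setdefault(event["player"], []).append(event[parameter])

    # Players with a single data point are skipped: SD is undefined for n = 1.
    stats = {
        player: {"n": len(v), "mean": mean(v), "sd": stdev(v)}
        for player, v in values.items() if len(v) >= 2
    }
    if not stats:
        return {}

    median_of_means = median([s["mean"] for s in stats.values()])
    median_of_sds = median([s["sd"] for s in stats.values()])

    for s in stats.values():
        # Classify on unrounded values, then round for presentation.
        s["rater"] = "High" if s["mean"] > median_of_means else "Low"
        s["variability"] = "High" if s["sd"] > median_of_sds else "Low"
        s["mean"], s["sd"] = round(s["mean"], 3), round(s["sd"], 3)
    return stats

events = [
    {"player": "A", "sleep": 8}, {"player": "A", "sleep": 8},
    {"player": "B", "sleep": 4}, {"player": "B", "sleep": 6},
    {"player": "C", "sleep": 6}, {"player": "C", "sleep": 7},
    {"player": "D", "sleep": 5},  # single measurement, excluded
]
result = parameter_stats(events, "sleep")
for player, s in result.items():
    print(player, s)
```

In this toy data, player A is a High Rater with Low Variability, while player B is a Low Rater with High Variability, which is the kind of profile a coach may want to follow up on.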

RAG Systems: Giving the LLM Knowledge and Context

Retrieval-Augmented Generation (RAG) complements the MCP by giving the model access to domain-specific knowledge – in this case, books such as Monitoring Training and Performance Data in Athletes. The RAG system retrieves the most relevant passages so that the LLM’s output is grounded in expert theory rather than hallucination.

User Query → Vector Database (Books) → Retrieved Context → LLM → Response (complements the MCP response)

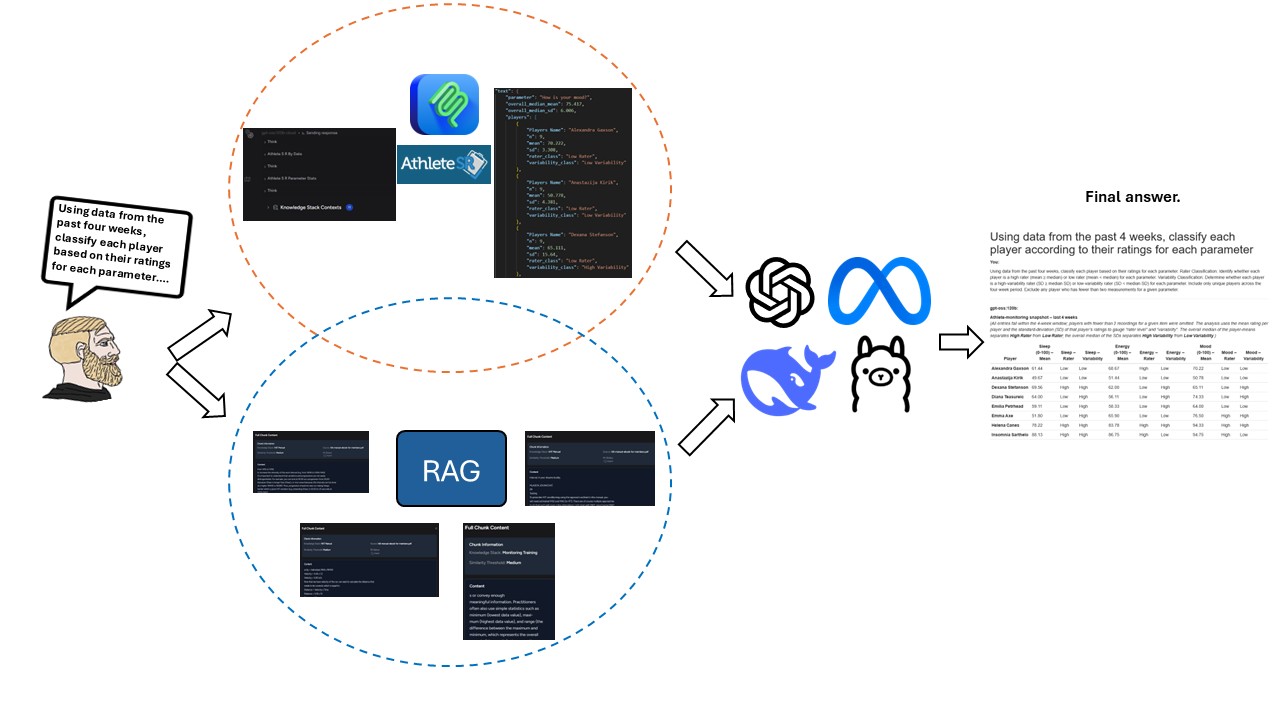
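To illustrate the retrieval step, here is a deliberately simplified sketch that ranks passages by cosine similarity. It substitutes word counts for learned embeddings and a Python list for the vector database, so it shows the principle rather than the actual pipeline. The passages are placeholder sentences written for this example, not quotations from any book.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A real system would use a learned embedding model."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, chunks: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]

# Placeholder passages standing in for chunks of an indexed book.
chunks = [
    "Sleep quality can be tracked with short daily wellness questionnaires.",
    "Sprint volume should be progressed gradually across a training block.",
    "Session RPE multiplied by duration gives a simple training load score.",
]
top = retrieve("How do I monitor sleep quality?", chunks, top_k=1)
print(top)
```

The retrieved passages are then placed in the LLM’s prompt, which is what ties the generated answer to the source material.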

The Multi-Agent Setup: When MCP and RAG Work Together

- The user submits a question to the Chatbot

- The AI Engine forwards the request to the LLM

- The LLM selects the MCP tool to retrieve real-time athlete data from AthleteSR

- The MCP tool fetches personalized information (e.g., morning wellness data of female soccer players) and returns it to the model

- The user can ask follow-up questions, which trigger RAG retrieval from Pinecone Vector Store (textual chunks from a given book)

- RAG provides expert knowledge from relevant sources (e.g., Monitoring Training and Performance Data in Athletes)

- The LLM integrates the personalized athlete data and expert knowledge into a single response, generating individualized recommendations based on the athlete, the specified date range, and the pertinent monitoring metrics

The figure below provides a visual overview of how the entire system operates.

Figure 3: The system uses AthleteSR data and a scientific monitoring framework to deliver personalized recommendations or summaries
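The final integration step in the list above can be pictured as assembling one prompt from both sources. The sketch below is an assumption about how this might be done; in the actual setup, AI Engine handles the orchestration. The function name, prompt wording, and sample inputs are all illustrative.

```python
import json

def build_prompt(question: str, athlete_data: dict, passages: list[str]) -> str:
    """Merge tool output and retrieved passages into a single grounded prompt."""
    # Number the passages so the model can refer to them in its answer.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "You are a coaching assistant. Use only the data and passages below.\n\n"
        f"Athlete data (JSON):\n{json.dumps(athlete_data, indent=2)}\n\n"
        f"Reference passages:\n{context}\n\n"
        f"Question: {question}"
    )

prompt = build_prompt(
    "Who needs more recovery?",
    {"player": "Player A", "sleep_mean": 5.2},
    ["Passage one.", "Passage two."],
)
print(prompt)
```

Keeping the athlete data and the reference passages in separate, labeled sections makes it easier to check which source a given recommendation came from.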

Now, let’s see how this works in practice in the example below.

Practical example:

I asked the chatbot to classify players based on the past 4 weeks’ sleep ratings, highlighting high vs. low sleep quality and high vs. low variability, and including only players with at least 2 measurements.

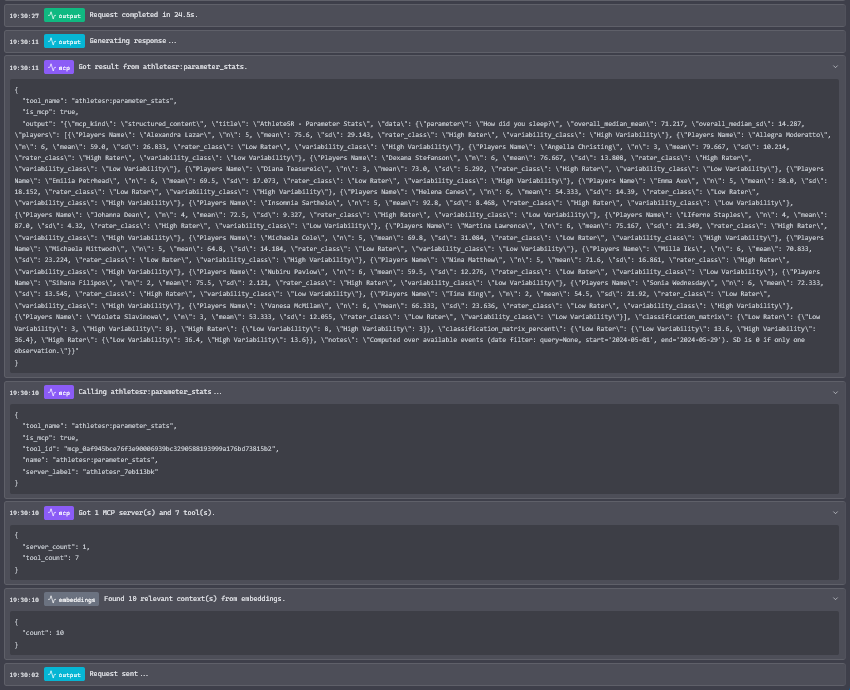

The athletesr:parameter_stats tool was triggered. It fetched and preprocessed the data for statistical analysis, returning structured JSON output, as shown below.

The AthleteSR Bot’s response to this query is shown below.

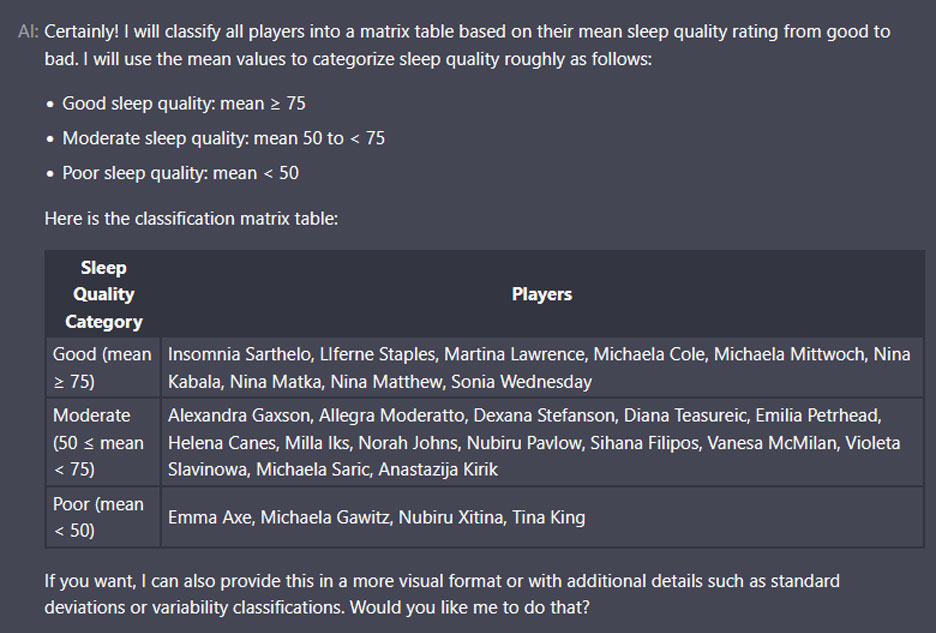

I then asked: Create a matrix classifying all players from good to poor sleep quality.

The response was:

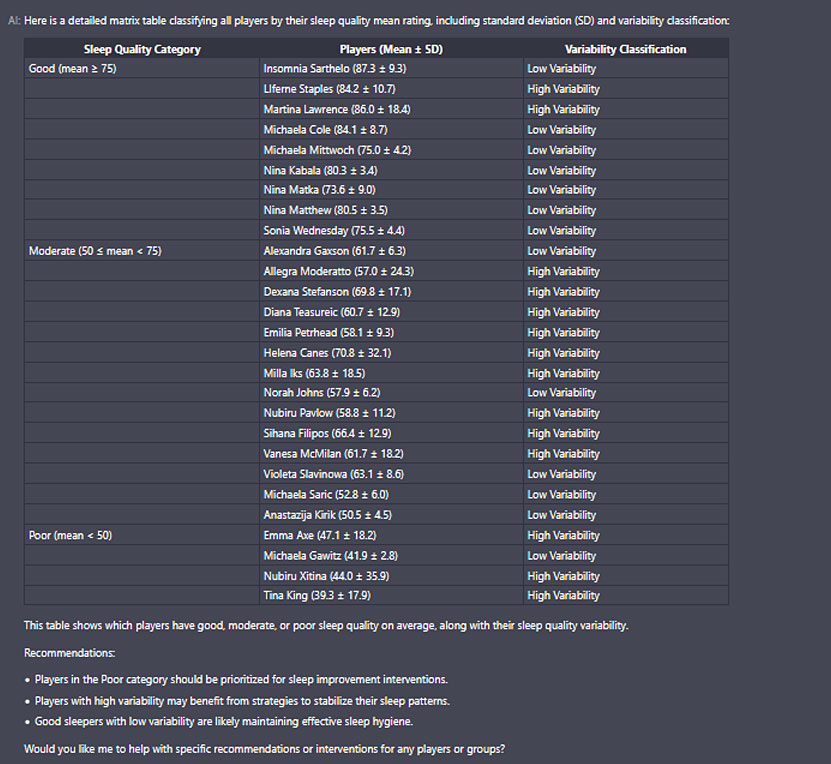

I requested additional details and the response was:

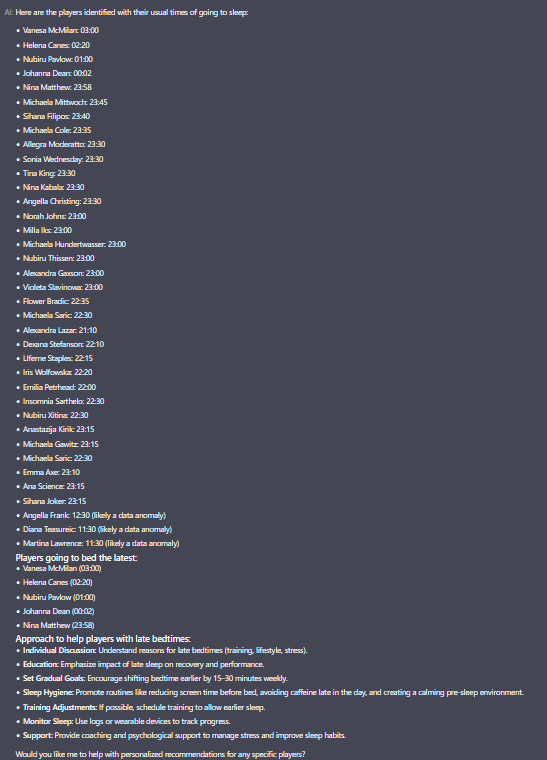

For the next prompt: Identify the latest sleepers, their usual bedtimes, and strategies to help them go to bed earlier.

The response is shown below.

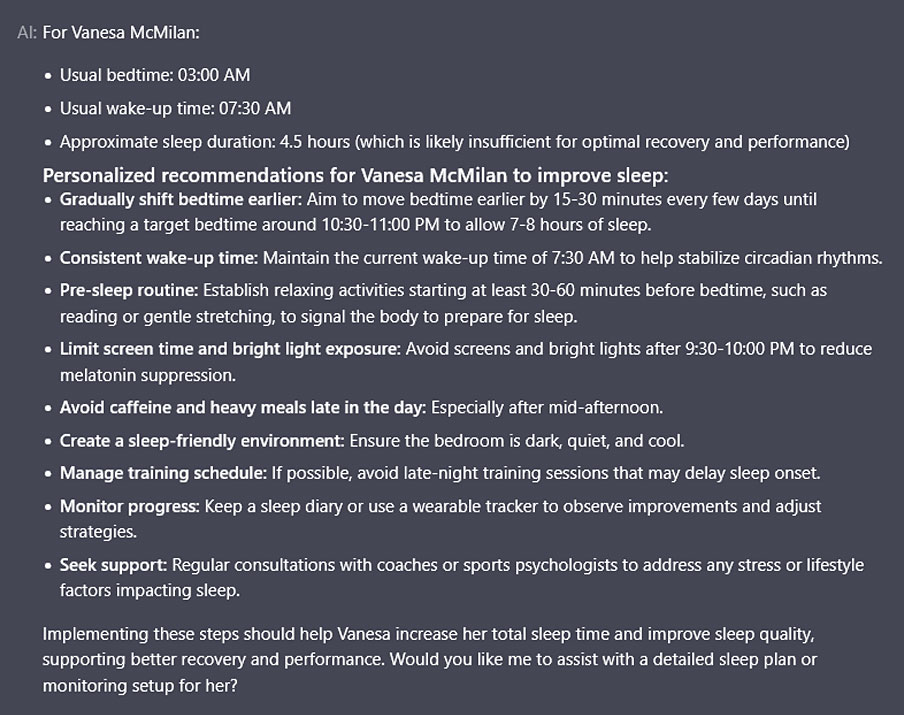

I then requested personalized recommendations for Vanesa McMilan, considering her wake-up time. The Bot generated these using the RAG system.

The image below shows the full orchestration – the steps the chatbot follows to generate a response. It determines which agents or tools to use; here, the chatbot combined the 10 most relevant RAG chunks (retrieved by embedding similarity) with the athletesr:parameter_stats tool to answer the question.

Why This Matters for Coaches

The technology described above is a practical tool that can change the way coaches plan, monitor, and communicate with their athletes. Below I break down the concrete reasons why strength and conditioning professionals should start thinking about MCP + RAG as part of their coaching stack.

Personalized Training Plans Delivered at Scale

Traditional workflow

The coach extracts data manually from spreadsheets or other data sources, spends considerable time cleaning it, then writes a generic template plan that is later tweaked per athlete.

MCP + RAG workflow

The coach asks the chatbot: “Give me a 3‑week HIIT block for my midfielders that takes into account their sleep quality and readiness scores from the last 14 days.”

- The MCP tool pulls the latest sleep, mood, and readiness data for every midfielder.

- The RAG system retrieves the most relevant evidence-based knowledge from scientific sources such as books and peer-reviewed articles (e.g., a guideline stating that high-intensity training volume should be reduced by 15 % when sleep duration is < 6 h).

- The LLM blends the two streams and outputs a fully‑customized block, complete with day‑by‑day load, recovery recommendations, and a short justification for each modification.

Result: A plan that already respects each athlete’s physiological state, with a clear scientific rationale that the coach can show to the athlete or the support staff.

Real‑Time, Data‑Driven Decision‑Making

- Immediate access to live metrics – Because the MCP calls the AthleteSR API on‑the‑fly, the coach never works with stale numbers. A sudden drop in an athlete’s energy rating can trigger a rapid reallocation of training load.

- Statistical context at the click of a button – In our example the athletesr:parameter_stats tool delivers group‑level means, medians, and variability in seconds, allowing the coach to spot outliers (high‑variability sleepers, chronically low mood) without manual spreadsheet wrangling.

- Evidence‑backed explanations – The RAG component pulls directly from the scientific literature (e.g., Monitoring Training and Performance Data in Athletes) to give the coach an evidence-backed “why” for any recommendation. This closes the gut‑feel gap that often leads to pushback from athletes or administrators.

Enhancing Athlete Engagement & Ownership

- Transparent reasoning – When an athlete receives a recommendation that says, “Your average sleep quality this week is 5.2, which places you in the Low Rater group. Therefore we’ll reduce your high‑intensity volume by 10 % this week (based on the scientific knowledge retrieved by the RAG system),” the athlete can see the data and the scientific basis behind the decision.

- Interactive follow-ups – Because the chatbot remains within the conversation context, coaches and athletes can ask questions such as, “What can I do to improve my morning wellness score?” and instantly receive a personalized, evidence-based sleep hygiene checklist.

- Goal‑setting integration – Coaches can ask the system to track progress toward a specific target (“Raise the team’s average energy rating from 6.2 to ≥ 7.0 over the next 4 weeks”) and the LLM will automatically surface weekly compliance tables and suggest corrective actions.

Conclusion

The convergence of Model Context Protocols (MCPs) and Retrieval‑Augmented Generation (RAG) marks a decisive shift from static, one‑size‑fits‑all training prescriptions toward personalized, evidence‑based coaching. By allowing large language models to pull live performance data and push domain‑specific scientific knowledge into the same conversational flow, we finally have a tool that can:

- Read the athlete’s current state (sleep, mood, readiness) directly from the monitoring platform.

- Interpret that state through the lens of up-to-date sport science research.

- Write a clear, actionable recommendation that the coach can trust, share, and adapt in seconds.

Current State of the Project

- Proof of concept demonstrated – The demo described above shows a fully functional pipeline that transforms a raw JSON payload into a nuanced training matrix, complete with statistical classifications and literature‑backed justifications.

- Tool‑agnostic architecture – The MCP specification is a universal “USB‑C for AI”, allowing any future data source (e.g., wearable EEG, metabolic carts) to be integrated and used alongside other contexts.

- Robust knowledge base – By indexing peer‑reviewed textbooks and key research articles into a vector store, the RAG component reduces hallucinations and grounds every output in validated science.

Planned Next Steps

Over the next several months the system will evolve from a functional demo into a versatile coaching platform. The first priority is to broaden the suite of MCP tools so that the chatbot can answer a wider range of statistical questions.

Each new tool will be built using the same MCP specification, which means any sports‑science expert – whether a physiologist, biomechanist, or data analyst – can propose a metric, supply a small API wrapper, and instantly make it callable by the LLM. This open‑ended architecture invites collaboration across disciplines and ensures the platform stays responsive to emerging research and team‑specific needs.

On the knowledge side, the RAG component will be enriched beyond the core textbook chapters.

In sum, the near‑future plan is to expand the analytical toolbox so that any conceivable performance metric can be queried, and to deepen the knowledge base so that the system draws from a rich tapestry of scientific literature and real‑world coaching experience. This dual expansion will keep the platform flexible, evidence‑rich, and useful for a broad community of sports‑science professionals.

Final Thought – AI as a Partner, Not a Replacement

Coaches bring a wealth of tacit knowledge: team culture, tactical nuance, personal rapport, and the ability to read a locker‑room atmosphere – qualities that no LLM can replicate. MCP + RAG simply extends that expertise, handling the grunt work of data retrieval, statistical summarization, and evidence‑backed justification. When the coach remains the final decision-maker, the synergy yields:

- Higher accuracy – Decisions are grounded in up‑to‑date measurements and peer‑reviewed science.

- Greater efficiency – Hours spent cleaning spreadsheets become minutes spent on strategic planning.

- Stronger athlete trust – Transparent, data‑driven recommendations are easier to buy into.

In short, the coach becomes a human‑AI orchestra conductor, turning a chorus of raw numbers and research papers into a harmonious, personalized training plan that adapts in real time.

Call to Action:

If you’re a coach, sport scientist, or technologist eager to test the limits of this approach, start by deploying a simple MCP endpoint for your existing monitoring platform and loading one chapter from a trusted textbook into a vector store. Ask the chatbot a real‑world question (e.g., “How should I adjust the sprint volume for my sprinters given their last week’s fatigue scores?”). Observe the output, share your feedback, and iterate. The future of coaching is already here; your next step is simply to take it.
