Intermittent Endurance 20-10

I have just finished this beep test, and after an ALPHA test I did on myself I decided to share it and wait for feedback.

How is it done?

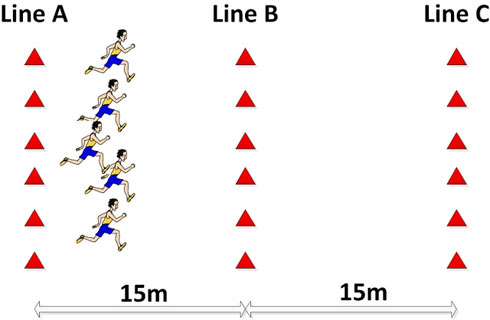

The test is done in a shuttle arrangement (30m) – see the picture.

Each run follows the same format: a 20sec run, then 10sec of passive rest (or walking to the nearest line). Each speed stage is repeated 4 times in a row before proceeding to the next stage, where the speed increases by 1km/h. The athlete should cross lines A and C with one foot on the signal (make sure to turn facing the same direction each time, so that both the left and the right leg are used) and cross line B on the signal as well.
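To make the format concrete, here is a rough sketch of the stage structure in Python. The 20sec run, 10sec rest, 4 repeats and 1km/h step come from the description above; the starting speed of 10km/h is only a placeholder, since the text does not state one, and the distance per run assumes a constant average speed with no allowance for turning.

```python
RUN_S = 20           # work interval, from the text
REST_S = 10          # passive rest, from the text
REPS_PER_STAGE = 4   # each speed is repeated four times
STEP_KMH = 1.0       # speed increase between stages


def run_distance_m(speed_kmh, run_s=RUN_S):
    """Distance covered in one run at a constant average speed."""
    return speed_kmh / 3.6 * run_s


def stage_table(start_kmh, n_stages):
    """Rows of (stage, speed in km/h, metres per run, seconds elapsed at stage end)."""
    rows = []
    for stage in range(1, n_stages + 1):
        speed = start_kmh + (stage - 1) * STEP_KMH
        elapsed = stage * REPS_PER_STAGE * (RUN_S + REST_S)
        rows.append((stage, speed, round(run_distance_m(speed), 1), elapsed))
    return rows


if __name__ == "__main__":
    # start_kmh=10.0 is a hypothetical value, not the test's real starting speed
    for row in stage_table(start_kmh=10.0, n_stages=3):
        print(row)
```

Each stage lasts 4 x 30sec = 2min, so the work-to-rest ratio stays at 2:1 throughout and only the distance covered per run grows.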

When the athlete fails to be at the line on the signal 3 times in a row, the test is stopped and the score is the last fully completed level. If the athlete decides not to continue during the 10sec passive rest, the score is also the last fully completed level. In this case there is a slight difference between a player who didn't even begin the next stage and one who sucked it up and tried one more before being disqualified, even though both get the same score. If you really want to nit-pick about it, you can track the time at which the player stopped and calculate the in-between velocity.
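One possible way to calculate that in-between velocity is a simple linear interpolation over the time spent in the unfinished stage. This is an assumption for illustration, not a formula given in this post; the function name and the example values are hypothetical.

```python
STAGE_S = 4 * (20 + 10)  # one stage: four 20sec runs plus four 10sec rests
STEP_KMH = 1.0           # speed increase between stages


def in_between_velocity(last_full_stage_kmh, seconds_into_next_stage):
    """Interpolate a score between two stages (assumed linear, not the author's formula)."""
    if not 0 <= seconds_into_next_stage <= STAGE_S:
        raise ValueError("time must fall within one stage")
    fraction = seconds_into_next_stage / STAGE_S
    return last_full_stage_kmh + STEP_KMH * fraction


if __name__ == "__main__":
    # Athlete completed the 17km/h stage and stopped 60sec into the next one
    print(in_between_velocity(17.0, 60))
```

A player who stops in the rest right after finishing a stage gets 0sec and keeps the plain stage score, while the one who tried one more gets a small bonus.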

As you can see, the test has a lot in common with the IFT30-15 by Martin Buchheit. I have actually used the same beeps as Martin.

Why is it done?

The IE20-10 is designed with team sport athletes in mind. I have written about the importance of high-intensity running-based conditioning (HERE and HERE), and this test lets you use the score to design and individualize running-based conditioning. You can find more about this process in the great article by Dan Baker HERE.

The test for that purpose should be TRAINING SPECIFIC, not sport specific. If you plan to use long runs, the MAS test is the better choice. If you plan to use short, intensive shuttle runs (like 15-15, 30-30, etc.), then a test designed for that specific purpose is a must.

Why do we need a new test?

I have designed this test (this is a beta version, so I might re-design it) with the goal of overcoming some difficulties I have identified with other tests.

MAS test – Usually done as a time trial (1500m) or as a 5-6min run. MAS is the average velocity. The problems with this test (judged as a basis for intermittent conditioning) are that it is continuous, has no change of direction (COD), and has pacing issues with athletes who are not used to this type of running (most team sport athletes). Pacing is a real issue, and usually the score can get a lot better just by improving pacing (this goes back to the develop vs. express concept). Another issue is that athletes who score the same might respond totally differently to intermittent conditioning (see Martin Buchheit's work along with this PAPER).
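Since MAS here is just the average velocity of the trial, the arithmetic is short. A minimal sketch, where the function name and the 5:30 example time are made up for illustration:

```python
def mas_from_time_trial(distance_m, time_s):
    """Average velocity of a time trial, returned as (m/s, km/h)."""
    if time_s <= 0:
        raise ValueError("time must be positive")
    speed_ms = distance_m / time_s
    return speed_ms, speed_ms * 3.6


if __name__ == "__main__":
    # Hypothetical athlete: 1500m in 5min 30sec
    ms, kmh = mas_from_time_trial(1500, 5 * 60 + 30)
    print(f"{ms:.2f} m/s, {kmh:.1f} km/h")
```

Because the score is an average over the whole effort, an athlete who starts too fast or too slow ends up with a lower number than he is capable of, which is the pacing issue described above.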

YoYo Intermittent test – The problem with the YoYo, in my mind, although it has been shown to be valid for differentiating between levels, is that it doesn't give you the speed at which the athletes should train. Yes, you can see who is better and you can gauge improvement, but can you design an individual running session from it? Hardly. The problem is that the distance is held constant (20m back and forth) along with the rest, while the speed increases, which shifts the work-to-rest ratio.
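The shift in work-to-rest ratio is easy to see with a few numbers. In this sketch the 2 x 20m distance comes from the description above, while the 10sec rest and the two example speeds are assumptions chosen only for illustration.

```python
SHUTTLE_M = 2 * 20  # 20m out and 20m back, from the text


def work_time_s(speed_kmh, distance_m=SHUTTLE_M):
    """Seconds needed to cover a fixed distance at a constant average speed."""
    return distance_m / (speed_kmh / 3.6)


def work_to_rest(speed_kmh, rest_s=10.0):
    """Work-to-rest ratio for a fixed distance and a fixed rest (rest_s is an assumed value)."""
    return work_time_s(speed_kmh) / rest_s


if __name__ == "__main__":
    for speed in (10.0, 16.0):  # hypothetical stage speeds
        print(speed, round(work_time_s(speed), 1), round(work_to_rest(speed), 2))
```

With a fixed distance, the faster stages mean shorter work bouts against the same rest, so the ratio keeps dropping. In the IE20-10 the run time is fixed at 20sec instead, so the ratio stays at 2:1 at every speed.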

UMTT (Université de Montréal Track Test) – As far as I know, the UMTT is one of the first beep tests, if not the very first. There was a continuous variation, done on a 400m track (and a newer form called Vam-Eval), and a beep variation done in a shuttle arrangement. The flaws are similar to those of the MAS test, except that there is no pacing issue.

IFT30-15 – I find Martin's test the best of those mentioned. One of the things that 'bothered' me is that it starts too slow and each stage increases by only 0.5km/h, which makes it long to finish. Compared to the YoYo, you need to improve by only one stage to reach the SWC (Smallest Worthwhile Change; I am still learning about this). The reliability studies showed high ICC (and low CV) (I think they are done in this PAPER), but I still feel that there is a great possibility for error and bias: let an athlete off slightly and he reaches a higher stage and goes over the SWC. I can't say too much about sensitivity, because I am learning about this area myself, but if we compare % change, the YoYo tends to improve more than the 30-15IFT, and if we compare ES (Effect Size), they tend to be the same. Martin explained this to me over email and warned against using % change alone to judge test sensitivity. To judge test improvement one should also take test reliability into consideration.
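To show why % change and ES can tell different stories, here is a small sketch of the three numbers. It assumes the common conventions of standardizing the change by the pre-test between-athlete SD and of taking the SWC as 0.2 of that SD; neither definition is stated in this post, and the scores are invented for illustration only.

```python
from statistics import mean, stdev


def percent_change(pre, post):
    """Change in the group mean, as a percentage of the pre-test mean."""
    return (mean(post) - mean(pre)) / mean(pre) * 100


def effect_size(pre, post):
    """Change in the group mean divided by the pre-test SD (assumed convention)."""
    return (mean(post) - mean(pre)) / stdev(pre)


def smallest_worthwhile_change(pre, factor=0.2):
    """SWC as a fraction of the between-athlete SD (0.2 is an assumed convention)."""
    return factor * stdev(pre)


if __name__ == "__main__":
    # Invented final speeds in km/h for five athletes, before and after a block
    pre = [17, 18, 19, 20, 21]
    post = [18, 19, 20, 21, 22]
    print(round(percent_change(pre, post), 2))
    print(round(effect_size(pre, post), 2))
    print(round(smallest_worthwhile_change(pre), 2))
```

A test scored in metres can show a big % change simply because of its scale, while the ES relates the same improvement to how spread out the athletes are, which is closer to what Martin was pointing at.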

Since Martin is a world-renowned researcher, I cannot argue with him on the statistics (he would destroy me 🙂 ), but I still somehow feel that there is room for improvement in the test design. That's why I developed IE20-10.

How did I calculate the beeps?

Well – manually and with some help from Excel. I started with average speed…
