Thoughts on Injury Prediction

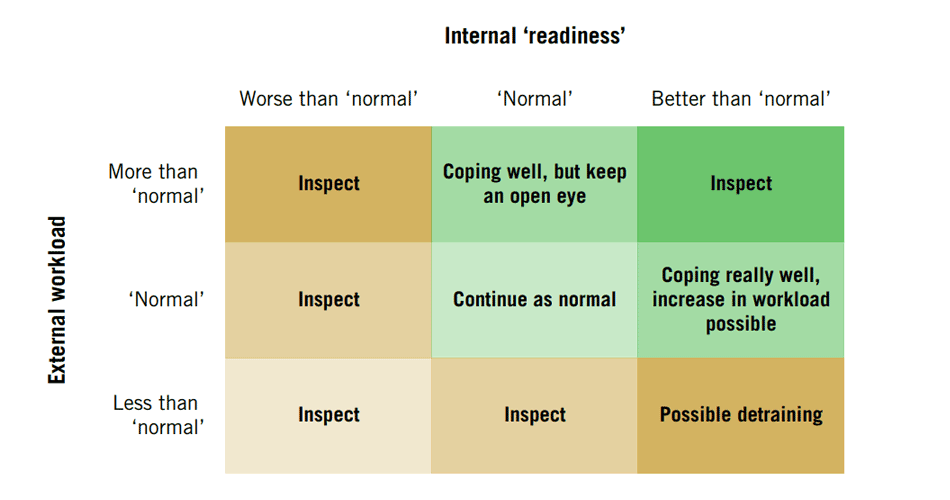

In the following video, I discuss the famous “J” curve in injury prediction and simulate some data to show how that curve is estimated. I also show the distinction between association and prediction, and how to make training decisions based on the different costs of committing false positive and false negative errors.
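Here is a minimal Python sketch of the simulate-then-estimate idea. It is not the R script from the video. It assumes a hypothetical training-load variable `x` and a made-up J-shaped true risk whose logit is quadratic in `x`, lowest near `x = 0.9` and rising steeply at high loads. It draws injured/uninjured outcomes from that risk and then estimates the curve with a logistic regression that includes a squared term, fitted by Newton-Raphson. All names and parameter values are illustrative assumptions.

```python
import math
import random

def true_risk(x):
    # Hypothetical J-shaped risk: logit = -3 + 2 * (x - 0.9)^2 (assumed values)
    return 1.0 / (1.0 + math.exp(-(-3.0 + 2.0 * (x - 0.9) ** 2)))

def simulate(n=5000, seed=1):
    # Draw loads uniformly on [0.5, 2.0] and injuries as Bernoulli(true_risk(x))
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n):
        x = rng.uniform(0.5, 2.0)
        xs.append(x)
        ys.append(1 if rng.random() < true_risk(x) else 0)
    return xs, ys

def solve3(a, b):
    # Gaussian elimination with partial pivoting for a 3x3 system a @ z = b
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            f = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= f * m[col][c]
    z = [0.0] * 3
    for r in range(2, -1, -1):
        z[r] = (m[r][3] - sum(m[r][c] * z[c] for c in range(r + 1, 3))) / m[r][r]
    return z

def fit_quadratic_logistic(xs, ys, iters=20):
    # Newton-Raphson for logit(p) = b0 + b1*x + b2*x^2
    beta = [0.0, 0.0, 0.0]
    for _ in range(iters):
        grad = [0.0] * 3                   # X^T (y - p)
        info = [[0.0] * 3 for _ in range(3)]  # X^T W X, W = p(1 - p)
        for x, y in zip(xs, ys):
            f = (1.0, x, x * x)
            eta = sum(b * fi for b, fi in zip(beta, f))
            p = 1.0 / (1.0 + math.exp(-eta))
            w = p * (1.0 - p)
            for i in range(3):
                grad[i] += (y - p) * f[i]
                for j in range(3):
                    info[i][j] += w * f[i] * f[j]
        step = solve3(info, grad)
        beta = [b + s for b, s in zip(beta, step)]
    return beta

def predict(beta, x):
    return 1.0 / (1.0 + math.exp(-(beta[0] + beta[1] * x + beta[2] * x * x)))

xs, ys = simulate()
beta = fit_quadratic_logistic(xs, ys)
for x in (0.5, 0.9, 1.5, 2.0):
    print(f"x={x:.1f}  true={true_risk(x):.3f}  fitted={predict(beta, x):.3f}")
```

Recovering the J shape here only describes the association between load and injury in the simulated sample. By itself it says nothing causal about what would happen if we changed an athlete's load.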

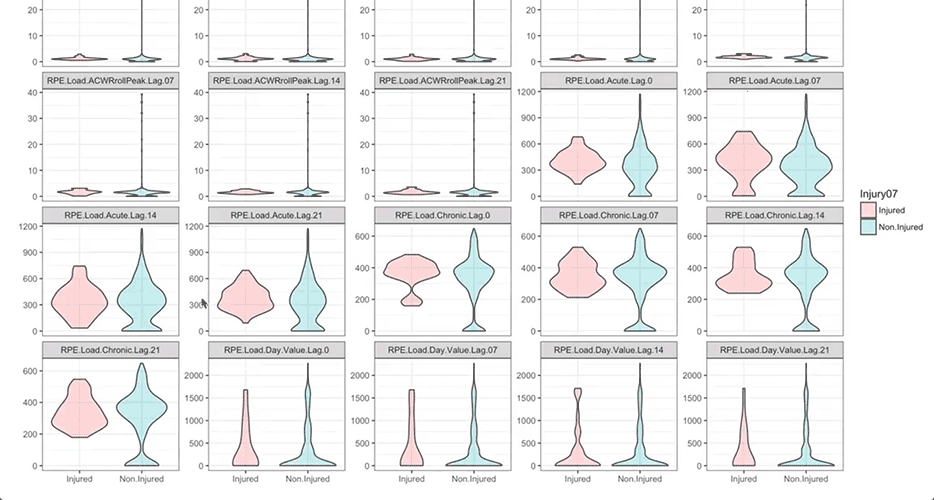

The point of the video is to point out the lack of practical usefulness (for intervention) of current injury prediction models and research: (1) we lack causal evidence, since we only have associations from observational data, and (2) the predictive performance of the models is poor (AUC below 0.7), which makes it hard to set a threshold for intervention (without even going into Lucas critique issues).
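One way to make the intervention threshold explicit is to attach costs to the two error types. The Python sketch below is an illustration, not the author's R script. It assumes hypothetical costs, with a missed injury costing five times as much as an unnecessary load reduction, and simulated predicted risks that are assumed to be well calibrated. It grid-searches the threshold that minimizes total cost and compares it with the cut-off that follows for calibrated probabilities: flag an athlete when `p * c_FN > (1 - p) * c_FP`, i.e. when `p > c_FP / (c_FP + c_FN)`.

```python
import random

C_FP = 1.0  # hypothetical cost of an unnecessary intervention (false positive)
C_FN = 5.0  # hypothetical cost of a missed injury (false negative)

def total_cost(probs, outcomes, threshold, c_fp=C_FP, c_fn=C_FN):
    # Sum the cost of every misclassified athlete at this threshold
    cost = 0.0
    for p, y in zip(probs, outcomes):
        flagged = p >= threshold
        if flagged and y == 0:
            cost += c_fp
        elif not flagged and y == 1:
            cost += c_fn
    return cost

def best_threshold(probs, outcomes, c_fp=C_FP, c_fn=C_FN):
    # Grid search over thresholds 0.00, 0.01, ..., 1.00
    grid = [i / 100 for i in range(101)]
    return min(grid, key=lambda t: total_cost(probs, outcomes, t, c_fp, c_fn))

rng = random.Random(42)
# Hypothetical calibrated risks: outcomes are drawn from the predicted probabilities
probs = [rng.betavariate(1, 6) for _ in range(10000)]
outcomes = [1 if rng.random() < p else 0 for p in probs]

t_grid = best_threshold(probs, outcomes)
t_theory = C_FP / (C_FP + C_FN)  # cost-based cut-off for calibrated probabilities
print(f"grid-search threshold={t_grid:.2f}, cost-based cut-off={t_theory:.3f}")
```

The cut-off depends only on the assumed costs. How many athletes end up flagged, and how many of those actually get injured, depends on how well the model discriminates.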

Here is the video. Enjoy watching, and I look forward to your comments and critiques.

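Note: In the video, I said that changing the threshold changes only the off-diagonal cells of the confusion matrix, which is obviously wrong: moving the threshold shifts cases between all four cells. The point I wanted to make is that accuracy (the proportion of correctly classified athletes, injured and uninjured alike) is a poor performance metric because it depends on the threshold chosen. What we should use instead is the ROC curve and the area under it (AUC), because they summarize performance across all thresholds and are therefore not affected by the threshold selected.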
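To illustrate the note, the Python sketch below simulates a weak hypothetical risk score: prevalence is assumed at 10%, and injured athletes score only 0.55 standard deviations higher on average. It compares accuracy at several thresholds with the AUC. The AUC is computed here as the Mann-Whitney probability that a randomly chosen injured athlete gets a higher score than a randomly chosen uninjured one. The data, prevalence, and effect size are assumptions for illustration only.

```python
import random

def accuracy(scores, outcomes, threshold):
    # Share of athletes classified correctly (injured and uninjured alike)
    hits = sum((s >= threshold) == (y == 1) for s, y in zip(scores, outcomes))
    return hits / len(outcomes)

def auc(scores, outcomes):
    # Probability that a random injured athlete outscores a random uninjured one
    # (ties count one half); no threshold is involved
    pos = [s for s, y in zip(scores, outcomes) if y == 1]
    neg = [s for s, y in zip(scores, outcomes) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

rng = random.Random(7)
outcomes = [1 if rng.random() < 0.10 else 0 for _ in range(2000)]
# Weak hypothetical model: true AUC is about 0.65 for a 0.55 SD shift
scores = [rng.gauss(0.55 if y else 0.0, 1.0) for y in outcomes]

for t in (float("-inf"), 0.0, 0.5, 1.0, float("inf")):
    print(f"threshold={t}: accuracy={accuracy(scores, outcomes, t):.3f}")
print(f"AUC={auc(scores, outcomes):.3f}")
```

Flagging nobody (a threshold of infinity) gives an accuracy of roughly 0.9 simply because about 90% of the simulated athletes stay healthy, even though it catches no injuries. The AUC is one number for the whole model, whichever threshold is later chosen.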

Complementary Training members can find and download the R script below.
